RunCue

I developed Runcue in December 2025 while working on various LLM pipelines and knowledge management projects, and found it made development much faster and easier to reason about.

Run interdependent background tasks in parallel from Python without all the infrastructure overhead.

Runcue is a Python library for coordinating work across rate-limited services. Define your tasks, tell runcue when they're ready to run, and let it handle the scheduling and throttling. Dependencies are expressed through artifacts (the files or records a task produces) rather than through task completion. This makes runcue more like a Makefile than a task queue, and therefore easier to build and scale.
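To make the Makefile comparison concrete, here is a minimal sketch of artifact-driven ordering in plain Python. It does not use runcue and is not how runcue is implemented; `run_pipeline`, `upper`, `count`, and the file names are hypothetical names chosen for illustration. A step runs only when its input artifacts exist (ready) and its output artifact is missing (stale).

```python
import tempfile
from pathlib import Path


def is_ready(inputs):
    """A step is ready once every input artifact exists."""
    return all(p.exists() for p in inputs)


def is_stale(output):
    """A step is stale while its output artifact is missing."""
    return not output.exists()


def run_pipeline(steps):
    """Run steps until none is both ready and stale; return the run order."""
    order = []
    progressed = True
    while progressed:
        progressed = False
        for name, inputs, output, action in steps:
            if is_ready(inputs) and is_stale(output):
                action(inputs, output)
                order.append(name)
                progressed = True
    return order


def upper(inputs, output):
    output.write_text(inputs[0].read_text().upper())


def count(inputs, output):
    output.write_text(str(len(inputs[0].read_text())))


workdir = Path(tempfile.mkdtemp())
raw = workdir / "raw.txt"
shouted = workdir / "shouted.txt"
length = workdir / "length.txt"
raw.write_text("hello")

# Steps are listed out of order on purpose: the artifacts decide the order.
steps = [
    ("count", [shouted], length, count),
    ("upper", [raw], shouted, upper),
]

first_run = run_pipeline(steps)   # "upper" runs first, then "count"
second_run = run_pipeline(steps)  # nothing runs: every output already exists
```

Because the decision depends only on what exists on disk, rerunning after a crash picks up where the artifacts left off, with no separate record of which tasks completed.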

What runcue Is

An embedded coordinator for rate-limited work

- Runs in your process (no external services)

- Fully in-memory (no Redis, no database)

- Stateless (your artifacts are the truth)

- Lightweight (just Python)

runcue handles the WHEN. You handle the WHAT.

Example

import asyncio
from pathlib import Path

import runcue

cue = runcue.Cue()

# One rate-limited service: 60 calls per minute, 5 concurrent
cue.service("api", rate="60/min", concurrent=5)

@cue.task("process", uses="api")
def process(work):
    # Your logic here (do_work is a placeholder for your own function)
    output = do_work(work.params["input"])
    Path(work.params["output"]).write_text(output)
    return {"done": True}

@cue.is_ready
def is_ready(work):
    # Ready once the input artifact exists
    return Path(work.params["input"]).exists()

@cue.is_stale
def is_stale(work):
    # Stale while the output artifact is missing
    return not Path(work.params["output"]).exists()

async def main():
    cue.start()
    await cue.submit("process", params={
        "input": "data.txt",
        "output": "result.txt"
    })
    await cue.stop()

asyncio.run(main())
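For intuition about what a setting like `rate="60/min", concurrent=5` asks a scheduler to do, here is a rough standalone sketch that combines a sliding-window rate limit with a concurrency cap. It is an assumption about the general technique, not runcue's internals; `SlidingWindowLimiter`, `run_throttled`, and `fake_call` are hypothetical names.

```python
import asyncio
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most max_calls starts within any window of `period` seconds."""

    def __init__(self, max_calls, period):
        self.max_calls = max_calls
        self.period = period
        self.starts = deque()  # timestamps of recent starts, oldest first

    def try_acquire(self, now):
        # Forget starts that have left the window.
        while self.starts and now - self.starts[0] >= self.period:
            self.starts.popleft()
        if len(self.starts) < self.max_calls:
            self.starts.append(now)
            return True
        return False


async def run_throttled(jobs, limiter, concurrent):
    """Run coroutine functions under a rate limit and a concurrency cap."""
    slots = asyncio.Semaphore(concurrent)
    in_flight = 0
    peak = 0

    async def run_one(job):
        nonlocal in_flight, peak
        async with slots:
            # Poll until the rate budget allows another start.
            while not limiter.try_acquire(time.monotonic()):
                await asyncio.sleep(0.01)
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await job()
            finally:
                in_flight -= 1

    results = await asyncio.gather(*(run_one(job) for job in jobs))
    return results, peak


async def fake_call():
    # Stand-in for a call to a rate-limited service.
    await asyncio.sleep(0.01)
    return "ok"


# Window logic checked with explicit timestamps: 2 starts per second.
window = SlidingWindowLimiter(max_calls=2, period=1.0)
decisions = [window.try_acquire(t) for t in (0.0, 0.1, 0.2, 1.05)]

# 8 jobs, at most 3 at a time, with a generous rate budget.
results, peak = asyncio.run(
    run_throttled([fake_call] * 8, SlidingWindowLimiter(60, 60.0), concurrent=3)
)
```

The two limits are independent: the semaphore bounds how many calls are in flight, while the window bounds how many may start per period, so a slow service is protected by the first and a quota by the second.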

Simulator

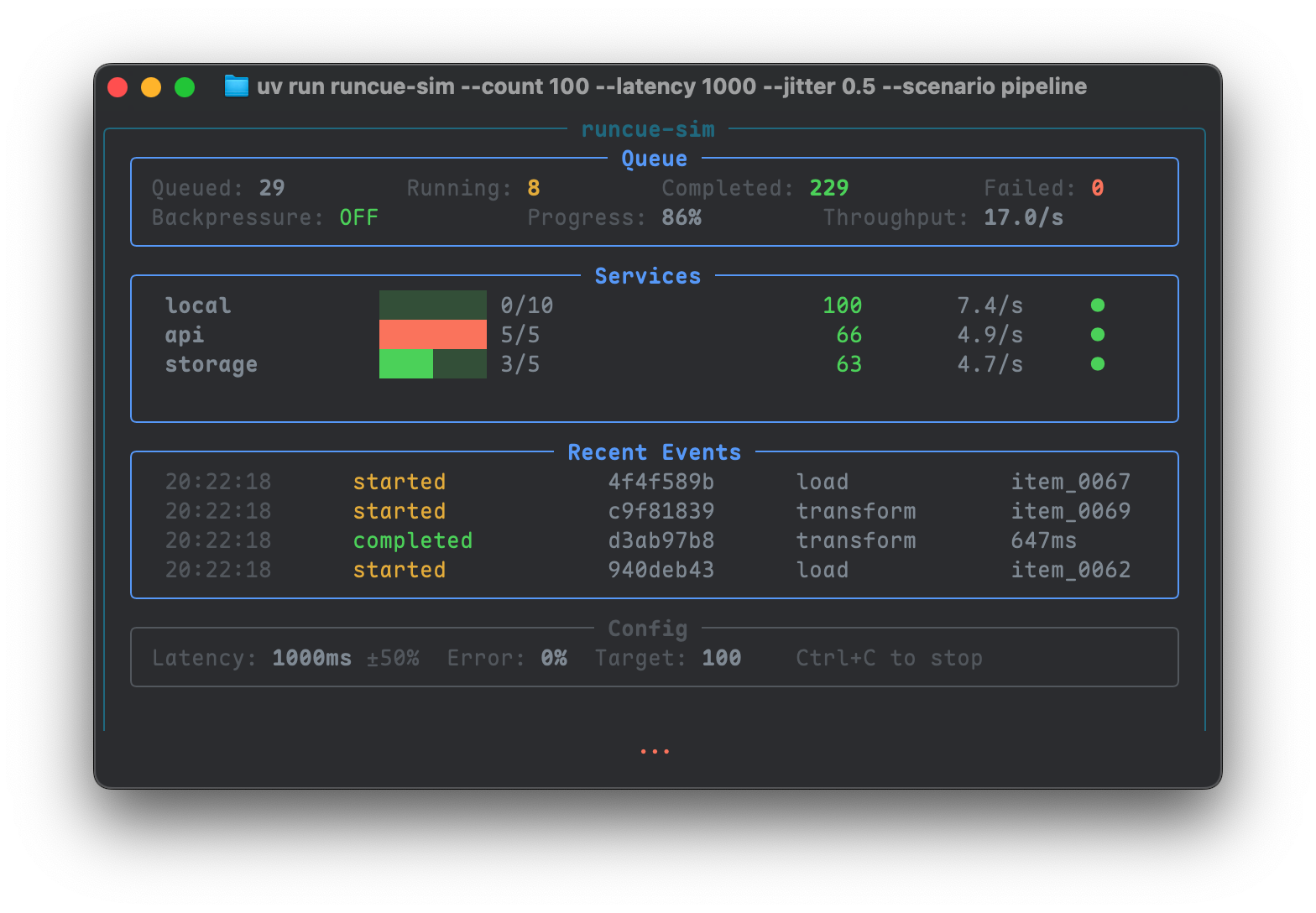

Runcue comes with a simulator that includes various scenarios for stress-testing situations like deadlocks and timeouts, and for finding the maximum parallelism the host can sustain.

Mandelbrot Example

The repo has an example that uses runcue to generate fractal frames in parallel and then assemble them into an animated GIF at the end.
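The general shape of that workflow (render frames independently, then assemble them in order) can be sketched with the standard library alone. This is not the repo's example and does not use runcue; `escape_count`, `render_frame`, and `render_animation` are hypothetical names, and the frames are plain grids of iteration counts rather than GIF images.

```python
from concurrent.futures import ThreadPoolExecutor


def escape_count(c, max_iter):
    """Iterations before z = z*z + c exceeds |z| > 2, capped at max_iter."""
    z = 0j
    for i in range(max_iter):
        if abs(z) > 2.0:
            return i
        z = z * z + c
    return max_iter


def render_frame(zoom, size=17, max_iter=30):
    """Render one square frame centred on the origin as a grid of counts."""
    half = 2.0 / zoom                # half-width of the visible region
    step = 2 * half / (size - 1)     # distance between neighbouring pixels
    return [
        [escape_count(complex(-half + x * step, -half + y * step), max_iter)
         for x in range(size)]
        for y in range(size)
    ]


def render_animation(zooms, workers=4):
    """Render every frame concurrently, then return them in zoom order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so assembly is just list().
        return list(pool.map(render_frame, zooms))


frames = render_animation([1.0, 1.5, 2.0, 3.0])
```

Threads keep the sketch short, but pure-Python arithmetic like this is CPU-bound, so a process pool would be the more likely choice when real speedup is the goal.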